GitHub - sayakpaul/tf.keras-Distributed-Training: Shows how to use MirroredStrategy to distribute training workloads when using the regular fit and compile paradigm in tf.keras.

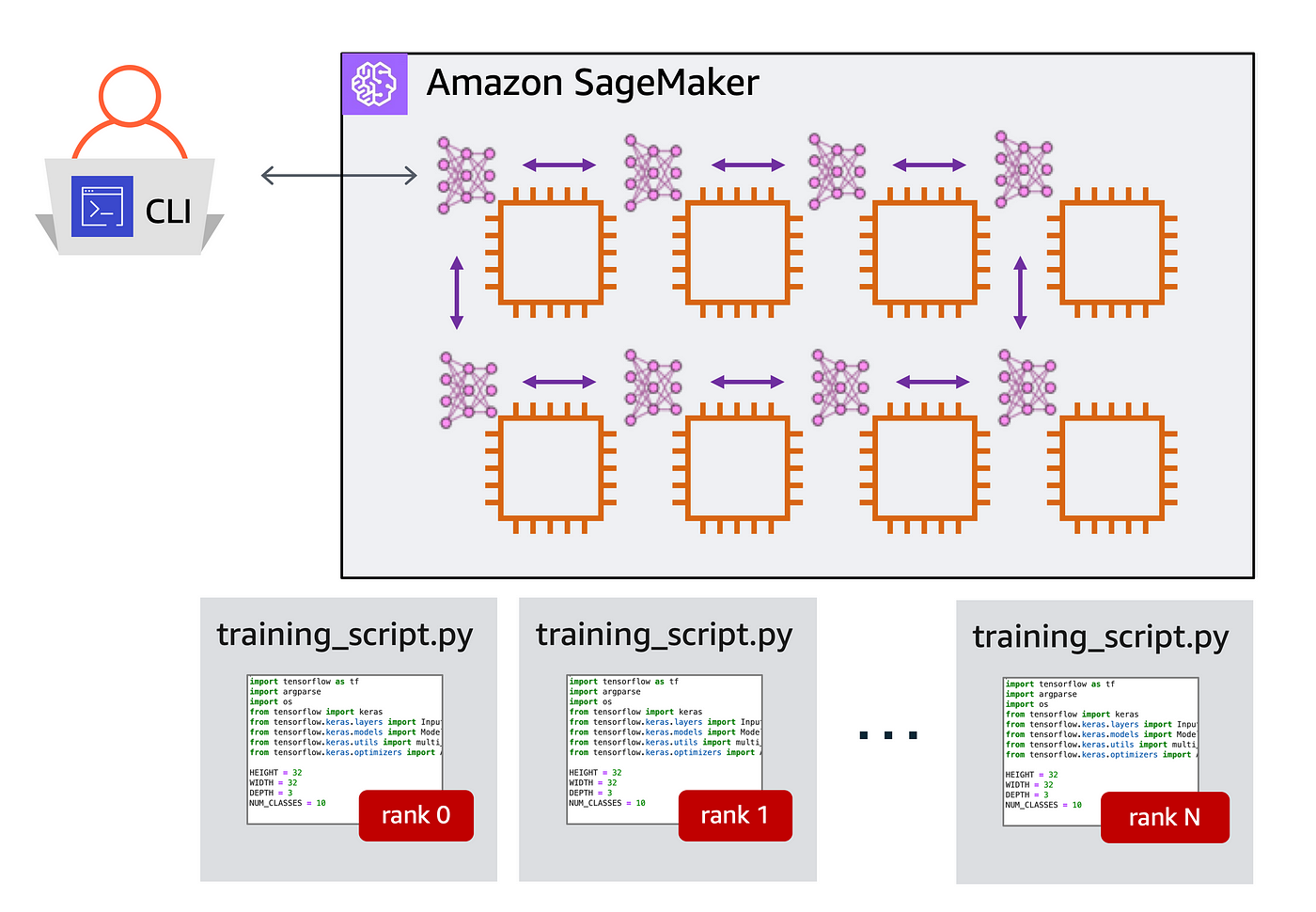

Multi-GPU and distributed training using Horovod in Amazon SageMaker Pipe mode | AWS Machine Learning Blog

Training Keras model with Multiple GPUs with an example on image augmentation. | by Jafar Ali Habshee | Medium

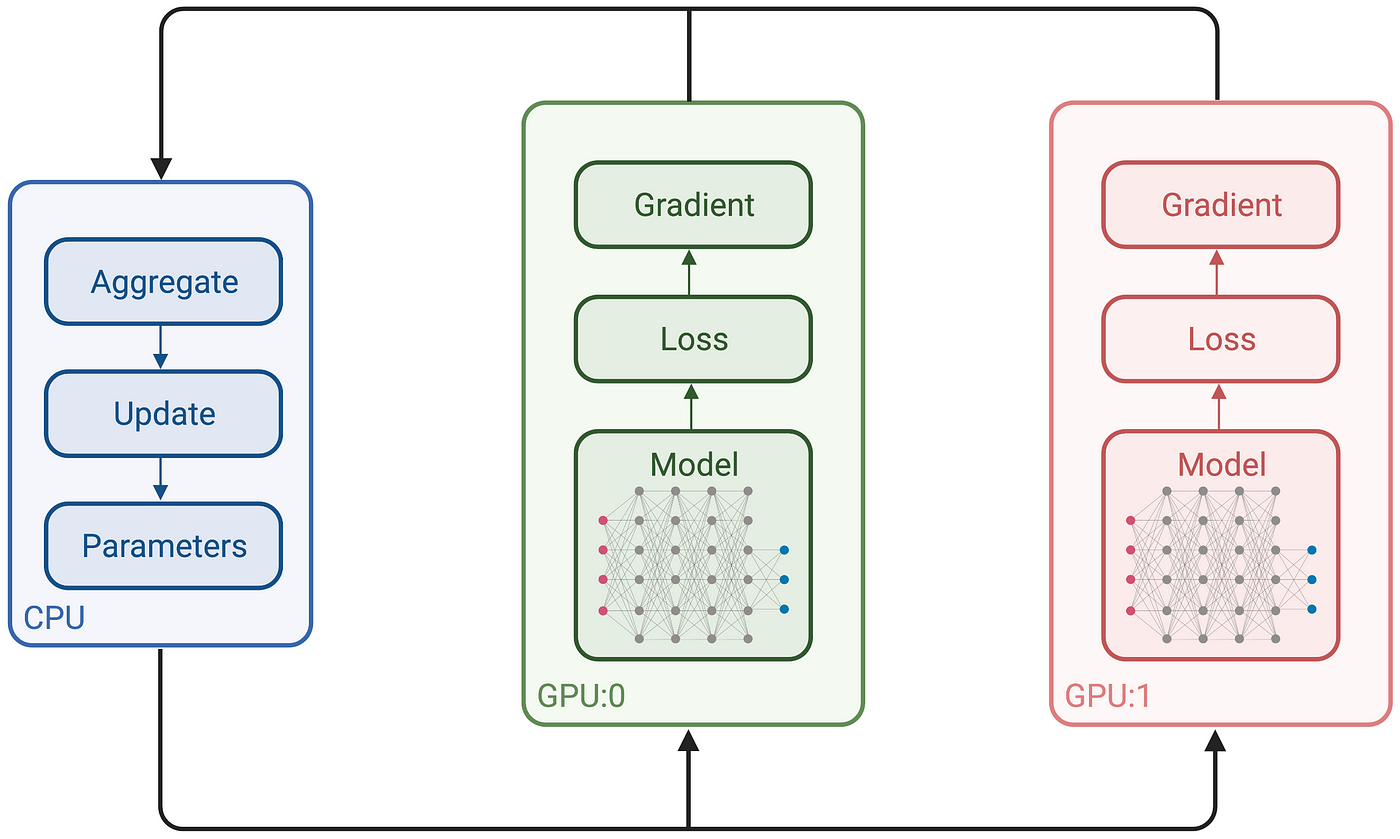

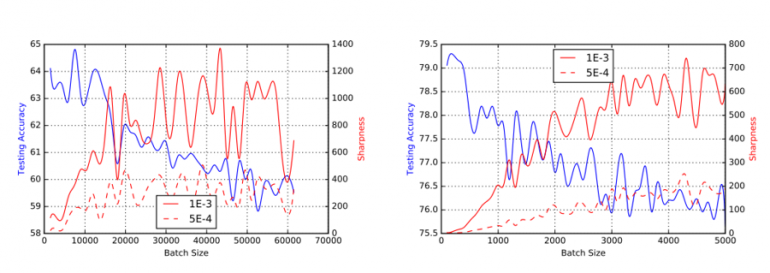

François Chollet on Twitter: "Tweetorial: high-performance multi-GPU training with Keras. The only thing you need to do to turn single-device code into multi-device code is to place your model construction function under