Monitor and Improve GPU Usage for Training Deep Learning Models | by Lukas Biewald | Towards Data Science

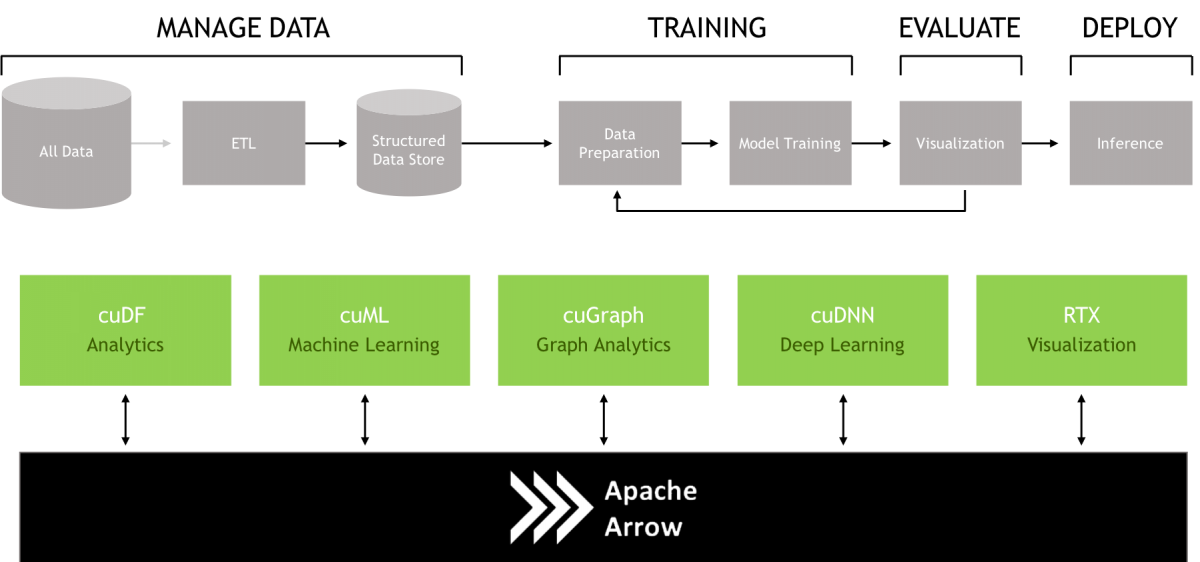

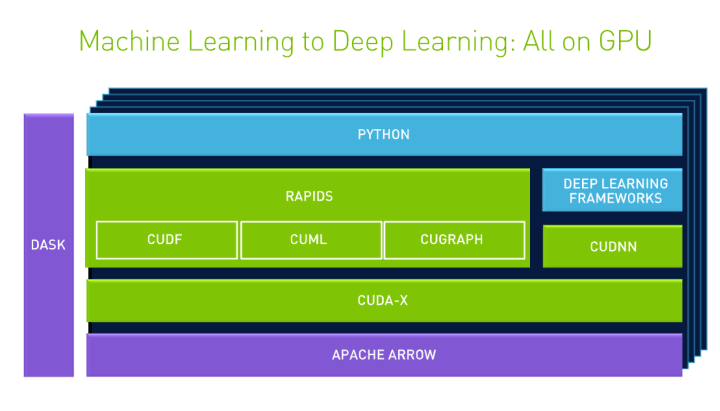

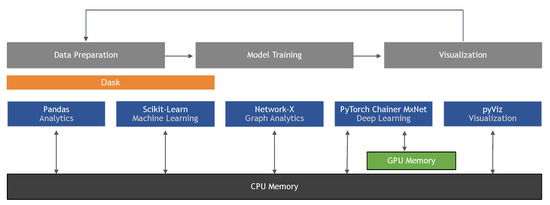

RAPIDS is an open source effort to support and grow the ecosystem of... | Download Scientific Diagram

Information | Free Full-Text | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence

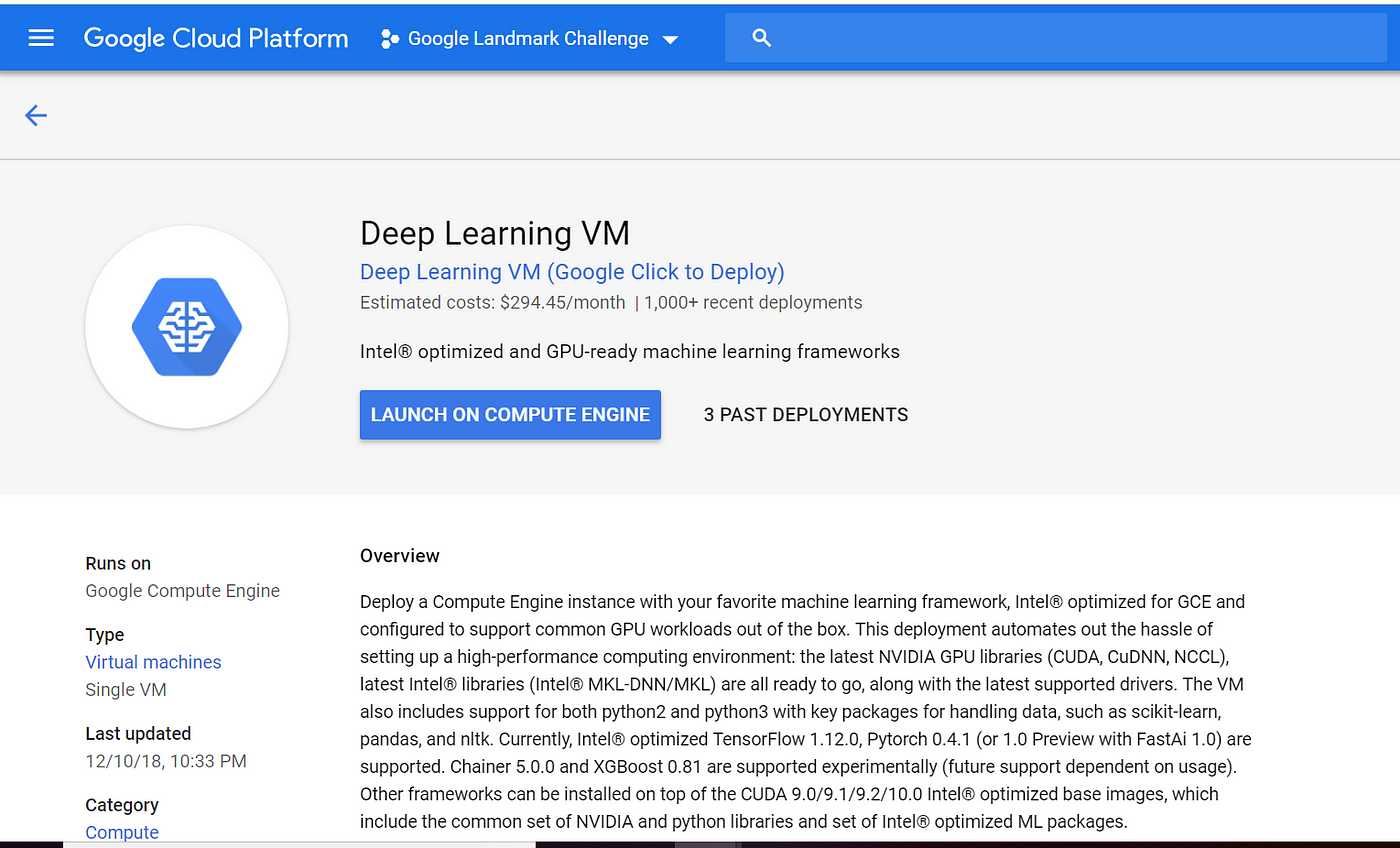

How to run Deep Learning models on Google Cloud Platform in 6 steps? | by Abhinaya Ananthakrishnan | Google Cloud - Community | Medium

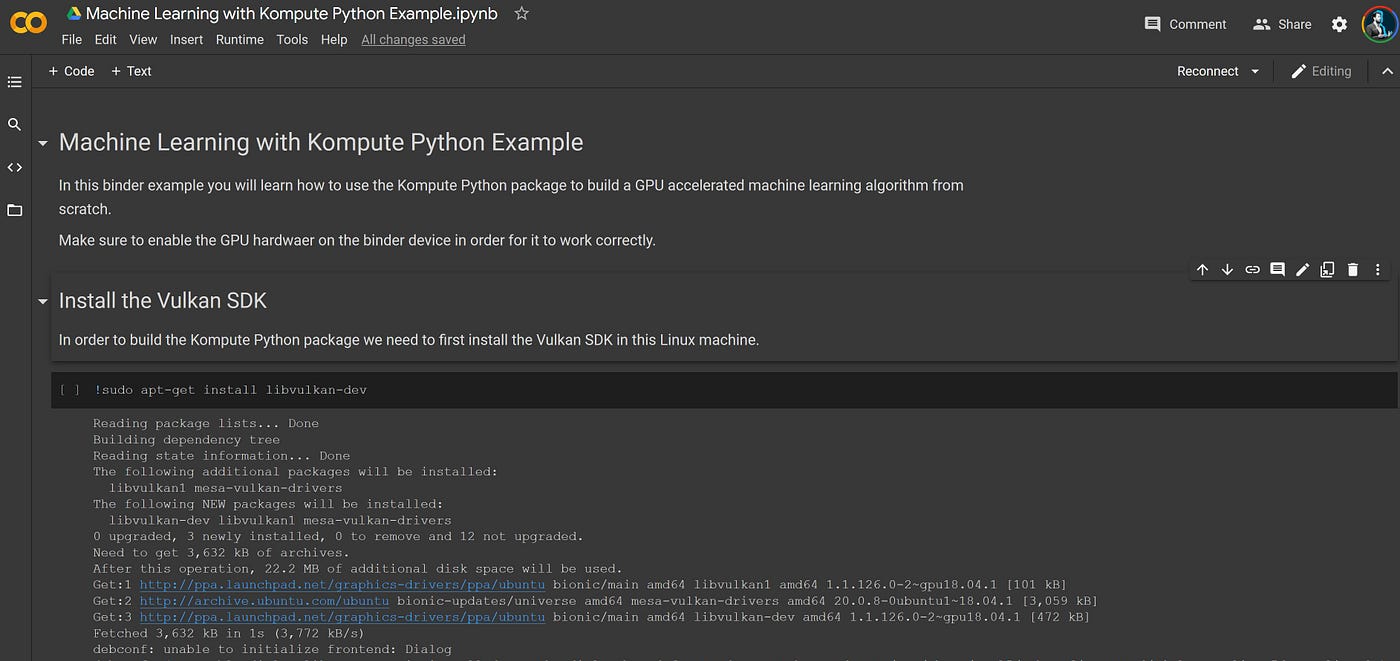

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

![\[D\] Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple : r/MachineLearning](https://external-preview.redd.it/7lLQAM6QKl67UsD5ElJ1PF7GwjblZnPcXgdqHv64b_A.jpg?width=640&crop=smart&auto=webp&s=03b8c9244d7278f8daf9813ac72d3a76008021bd)